Past research has consistently highlighted the crucial role of dopamine neurons in reward learning. Reward learning is a process through which humans and other animals acquire different information, skills, or behaviors by receiving rewards after performing specific actions, or after providing the ‘correct’/desired response to a question.

When individuals receive rewards that are better than what they expect to receive, dopamine neurons are activated. Conversely, when the rewards they receive are worse than what they predicted, dopamine neurons are suppressed. This specific pattern of activity resembles what are known as ‘reward prediction errors,’ which are essentially differences between the rewards received and those predicted.
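As a rough illustration, the definition above can be written as a one-line function. This is only a sketch: the function name and the juice volumes are hypothetical values chosen for the example, not figures from the study.

```python
def reward_prediction_error(received: float, predicted: float) -> float:
    """Return the reward received minus the reward predicted."""
    return received - predicted


# Hypothetical juice volumes in millilitres, chosen only for illustration.
predicted_ml = 0.4

better_than_expected = reward_prediction_error(0.6, predicted_ml)  # positive error
worse_than_expected = reward_prediction_error(0.2, predicted_ml)   # negative error
as_expected = reward_prediction_error(0.4, predicted_ml)           # zero error
```

A positive value corresponds to the case in which dopamine neurons are activated, and a negative value to the case in which they are suppressed.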

Researchers at the University of Pittsburgh have recently carried out a study investigating how the frequency of rewards and reward prediction errors may impact dopamine signals. Their paper, published in Nature Neuroscience, provides new and valuable insight into the dopamine-related neural underpinnings of reward learning.

“Reward prediction errors are crucial to animal and machine learning,” William R. Stauffer, Ph.D., one of the researchers who carried out the study, told Medical Xpress. “However, in classical animal and machine learning theories, the ‘predicted rewards’ part of the equation is simply the average value of past outcomes. Although these predictions are useful, it would be much more useful to predict average values, as well as more complex statistics that reflect uncertainty.”
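To make the ‘average value of past outcomes’ idea concrete, here is a minimal sketch of a predictor whose prediction is simply the running mean of the rewards seen so far. The class name and the reward values are hypothetical, and the incremental-mean update is one common way to write such a predictor, not necessarily the formulation used by the researchers.

```python
class RunningMeanPredictor:
    """Predicts the next reward as the average of all past rewards."""

    def __init__(self) -> None:
        self.count = 0
        self.prediction = 0.0

    def update(self, reward: float) -> float:
        """Observe a reward, return the prediction error, and update the mean."""
        error = reward - self.prediction
        self.count += 1
        # Moving the prediction by error / count keeps it equal to the exact mean.
        self.prediction += error / self.count
        return error


# Hypothetical sequence of juice volumes in millilitres.
predictor = RunningMeanPredictor()
errors = [predictor.update(r) for r in [0.2, 0.4, 0.6, 0.4]]
```

A predictor like this tracks only a single number, which is why it carries no information about how uncertain the outcomes are.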

The researchers drew inspiration from a study published in 2005 by Wolfram Schultz, Wellcome Principal Research Fellow, Professor of Neuroscience at the University of Cambridge and Stauffer’s post-doctoral mentor. This 2005 study showed that dopamine reward prediction error responses are normalized according to the standard deviation of the predicted rewards, which Schultz and colleagues operationalized as the range between the largest and smallest outcomes.

“That study was groundbreaking, because it showed that neuronal predictions do, in fact, reflect uncertainty,” Stauffer said. “However, there are several different ways to modulate uncertainty, and I suspect they are not psychologically equivalent.”

The range modulation that Schultz and colleagues used in their study (to vary standard deviation) left every potential reward with the same predicted probability.

“We were curious to know how dopamine neurons would respond if the range was constant, but the relative probability of rewards within that range changed,” Stauffer said. “Accordingly, the main objective of our study was to learn whether dopamine neurons were sensitive to the shapes of probability distributions.”

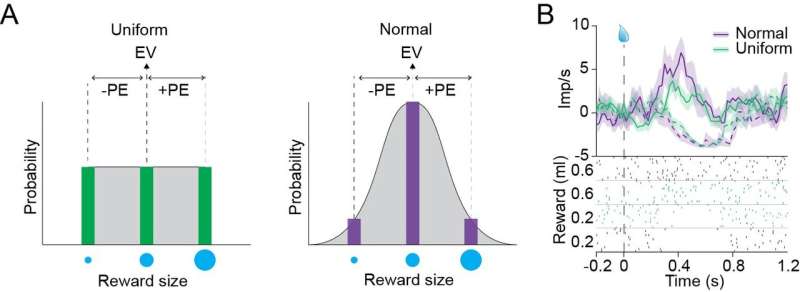

In their experiments, Stauffer and his colleagues used two different visual cues to predict rewards drawn from two different ‘reward probability distributions.’ Both of these virtual distributions contained three types of rewards, namely small, medium, and large juice drops.

One of the reward probability distributions resembled a normal distribution, where the central value (i.e., the medium juice drop) was delivered on most trials, while small and large juice drops were rarely delivered. The second reward probability distribution, on the other hand, followed what is known as a ‘uniform distribution,’ where small, medium and large rewards were delivered with equal probability (i.e., the same number of times).
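The difference between the two distributions can be sketched numerically. In the example below, the juice volumes and the probabilities of the normal-like distribution are hypothetical, since the article does not report them; only the equal probabilities of the uniform distribution follow from the description above.

```python
import math


def mean(values, probs):
    """Expected value of a discrete distribution."""
    return sum(v * p for v, p in zip(values, probs))


def std_dev(values, probs):
    """Standard deviation of a discrete distribution."""
    m = mean(values, probs)
    return math.sqrt(sum(p * (v - m) ** 2 for v, p in zip(values, probs)))


# Hypothetical small, medium and large juice volumes in millilitres.
reward_ml = [0.2, 0.4, 0.6]

# Uniform: each reward size is equally likely.
uniform_probs = [1 / 3, 1 / 3, 1 / 3]
# Normal-like: medium is common, small and large are rare (assumed values).
normal_like_probs = [0.1, 0.8, 0.1]

uniform_mean = mean(reward_ml, uniform_probs)
normal_like_mean = mean(reward_ml, normal_like_probs)
uniform_sd = std_dev(reward_ml, uniform_probs)
normal_like_sd = std_dev(reward_ml, normal_like_probs)
```

Under these assumed numbers, both distributions span the same range and have the same average reward, but the uniform distribution has the larger standard deviation. A prediction based only on the average would therefore treat the two cues identically, and only a signal sensitive to the shape of the distribution could tell them apart.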

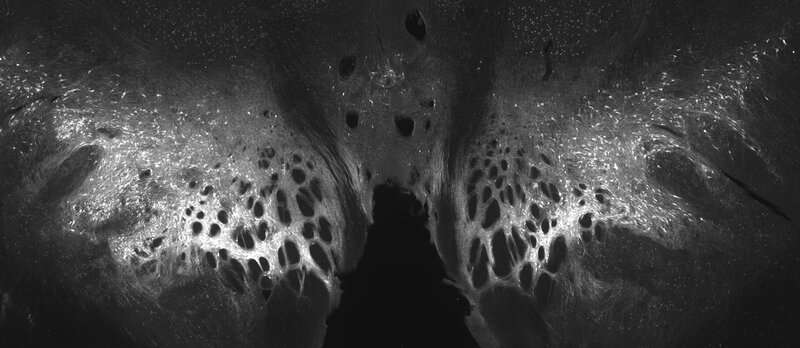

Using electrodes, Stauffer and his colleagues recorded dopamine responses while monkeys were viewing the visual cues associated with rewards from the two different reward probability distributions. They also recorded dopamine responses when the monkeys received rewards ‘drawn’ from the virtual reward probability distributions.

Remarkably, the researchers observed that rewards that were administered with a lower frequency (i.e., rare rewards) amplified dopamine responses in the brains of the monkeys. In comparison, rewards of the exact same size but delivered with greater frequency evoked weaker dopamine responses.

“Our observations imply that predictive neuronal signals reflect the level of uncertainty surrounding predictions and not just the predicted values,” Stauffer said. “This also means that one of the main reward learning systems in the brain can estimate uncertainty, and potentially teach downstream brain structures about that uncertainty. There are few other neural systems where we have such direct evidence of the algorithmic nature of neuronal responses, and these fascinating results indicate a new aspect of that neural algorithm.”

The study carried out by this team of researchers highlights the effects of reward frequency on dopamine responses elicited during reward learning. These findings will inform further studies, which could significantly enhance the current understanding of the neural mechanisms involved in reward learning.

Ultimately, the researchers want to explore how beliefs about probability can be applied to choices made under ambiguity (i.e., when outcome probabilities are unknown). In these specific decision-making scenarios, humans are generally forced to make decisions based on their beliefs about reward probability distributions.
