Stanford researchers have helped solve a longstanding mystery about how brains manage to process information so accurately, even though individual neurons, or nerve cells, act with a surprising degree of randomness.

The findings, published online on April 2 in the journal Nature, offer new insights into the limits of perception and could aid in the design of so-called neuroprosthetics—devices that enable people to regain some lost sensory capabilities.

In the new study, the researchers measured the activity of neurons in mouse brains as the animals visually discriminated between similar but not identical images. By analyzing data from around 2,000 simultaneously recorded neurons in each mouse, the researchers found strong evidence supporting a theory that perceptual limitations are caused by “correlated noise” in neural activity.

In essence, because neurons are highly interconnected, when one randomly responds incorrectly and misidentifies an image, it can influence other neurons to make the same mistake.

“You can think of correlated noise like a type of ‘groupthink,’ in which neurons can act like lemmings, with one heedlessly following another into making a mistake,” said co-senior author Surya Ganguli, an associate professor of applied physics in the School of Humanities and Sciences (H&S).

Remarkably, the visual system is able to cut through about 90% of this neuronal noise, but the remaining 10% places a limit on how finely we can discern between two images that look very similar.

“With this study, we’ve helped resolve a puzzle that’s been around for over 30 years about what limits mammals—and by extension humans—when it comes to sensory perception,” said co-senior author Mark Schnitzer, a professor of biology and of applied physics in H&S and an investigator at the Howard Hughes Medical Institute.

Watching the watcher

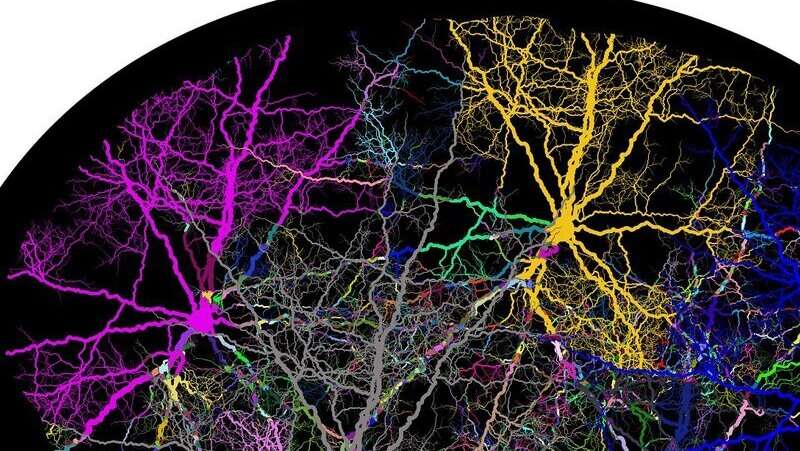

To record from roughly 2,000 neurons per mouse, study lead author Oleg Rumyantsev, a graduate student in applied physics at Stanford, led construction of a specialized brain-imaging apparatus. In this setup, a mouse could run in place on a treadmill while the scientists used optical microscopy to observe neurons in its primary visual cortex, the brain region responsible for integrating and processing visual information received from the eyes.

The mice in the study were genetically engineered to express fluorescent sensor proteins that report the activity levels of neurons in the cortex: when the neurons activate, these proteins give off more light, allowing the researchers to infer the cells’ activity patterns. Sweeping a set of 16 laser beams across the mouse’s visual cortex excited this fluorescence, allowing the researchers to watch how the cortical neurons responded to two different visual stimuli. The stimuli were similar-looking images of light and dark bands, a pattern known from previous research to strongly capture mice’s attention.

Based on how the neurons responded, the researchers could gauge how well the visual cortex distinguished between the two stimuli. Each stimulus generated a distinct pattern of neuronal response, with many neurons coding for either stimulus 1 or stimulus 2. Fidelity, however, was far from perfect, given neurons’ innate randomness: on some presentations of the stimuli, some neurons misfired and signaled the wrong one. Because of the groupthink of correlated noise, when one neuron got it wrong, other neurons sharing common inputs from the mouse’s retina and later stages of the visual circuitry were also more likely to make the same mistake.

It was only possible to uncover the true impact of this correlated noise because the Stanford researchers were able to observe a large set of neurons simultaneously. “Correlated noise only really manifests when you go up to about a thousand neurons, so before our study, it simply was not possible to see this effect,” Ganguli said.

More with less

On visual discrimination tasks, though, the brain still does remarkably well at cutting through the sheer volume of neuronal noise. Overall, around 90% of the noise fluctuations did not impair how accurately the neurons encoded the visual signal. Only the remaining 10% of the correlated noise degraded accuracy, and thus limited the brain’s ability to perceive. “The correlated noise does place a bound on what the cortex can do,” said Schnitzer.

The findings suggest that once a suitably large set of neurons (or of artificial, neuron-like processing elements) is available, throwing more of them at a sensory discrimination problem might not substantially boost performance. That insight could help developers of neuroprosthetics, the best known of which is the cochlear implant for people with hearing loss, learn to achieve more with less. “If you want to build the best possible sensory prosthetic device, you may only need to cue into, say, 1,000 neuron-like elements, because if you try to cue into more, you may not do any better,” said Ganguli.
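For readers who want to see why more neurons stop helping, here is a minimal Python sketch of a standard textbook toy model of "information-limiting" correlated noise. It is not the analysis used in the Nature study, and the per-neuron information and noise-strength values are hypothetical. In the model, independent noise would let information grow linearly with the number of neurons, but noise correlated along the signal direction (strength `eps`) caps the total at `1 / eps`, following from the Sherman-Morrison identity: information = I0 / (1 + eps * I0).

```python
# Toy model of information-limiting correlated noise (illustrative only;
# parameter values are hypothetical, not taken from the Stanford study).
#
# With independent noise, each neuron adds the same Fisher information, so
# the total I0 grows linearly with population size. Adding noise that is
# correlated along the signal direction with strength eps gives, via the
# Sherman-Morrison identity, I = I0 / (1 + eps * I0), which never exceeds 1/eps.


def fisher_info(n_neurons: int, info_per_neuron: float, eps: float) -> float:
    """Fisher information of n_neurons under information-limiting noise."""
    i0 = n_neurons * info_per_neuron  # information if the noise were independent
    return i0 / (1.0 + eps * i0)


def fraction_of_ceiling(n_neurons: int, info_per_neuron: float, eps: float) -> float:
    """Share of the 1/eps ceiling reached by a population of n_neurons."""
    return fisher_info(n_neurons, info_per_neuron, eps) * eps


if __name__ == "__main__":
    INFO_PER_NEURON = 0.1  # hypothetical information contributed per neuron
    EPS = 0.1              # hypothetical strength of the correlated noise
    for n in (10, 100, 1_000, 10_000, 100_000):
        info = fisher_info(n, INFO_PER_NEURON, EPS)
        share = fraction_of_ceiling(n, INFO_PER_NEURON, EPS)
        print(f"N={n:>7,}  info={info:6.3f}  ceiling reached={share:.1%}")
```

With these made-up parameters, 1,000 neurons already reach about 91% of the ceiling, and ten times as many add only about 8 more percentage points, which mirrors the diminishing returns Ganguli describes.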
